A wave is a periodic function \(f(x)\). This means that there is a fixed \(p > 0\), called a period of \(f\), such that for all \(x\) we have \(f(x + p) = f(x)\). We can change the period by an easy renormalization, so from now on all waves have period \(2 \pi \).

Obviously \(\sin (x)\) is periodic; that is why we fixed \(2 \pi \) as the period. Notice that \(\sin (kx)\) also has this period for any integer \(k\).

Given one wave \(f\), we can obtain new waves by translation. This is called changing the phase. Thus \(f(x - \delta )\) has phase \(\delta \).

The sum of waves is another wave. So we can obtain very general waves using formulas of the form \[\sum _{k = 0}^\infty A_k \sin (kx - \delta _k)\]

In the early part of the 1800’s, the great French mathematician Fourier declared that every wave can be uniquely written in this way. Moreover, given a wave, Fourier discovered an easy way to calculate the numbers \(A_k\) and \(\delta _k\). These numbers are called the amplitudes and phases of the wave harmonics.

The \(k = 0\) term in this formula is a constant and not very important. The \(k = 1\) term is the crucial term, giving the fundamental frequency of the wave. If we are dealing with a sound wave, this determines the note that we hear. The higher terms represent the harmonics of the wave: additional higher notes making up the sound. If \(k = 1\) represents a low C, the harmonics start an octave higher and give the notes \(C, G, C', E', G', B^{\flat '}, C''\) etc. These harmonics determine the quality of the sound, so we can distinguish the same note played by a flute, oboe, trumpet, etc. The human ear ignores the phases.

Fourier led an interesting life. During Napoleon’s invasion of Egypt, he was the secretary of the academic branch of the army. While the rest of the army was doing whatever armies do, the academic branch was making tracings of hieroglyphics. Eventually the English threw the French out of Egypt, but the academic branch published those tracings and for the first time scholars in the West had specific examples of hieroglyphics instead of vague pictures. This eventually led to the decipherment of hieroglyphics.

Fourier’s assertion about waves was immediately challenged by Cauchy and others. They pointed out that the sine function is continuous and differentiable and thus only continuous and differentiable waves could be so represented. But many significant waves do not have these properties, including the square wave, the sawtooth wave, and others.

Cauchy had written a book on analysis, which contained the theorem that if \(f_k(x)\) are continuous functions and \(f(x) = \sum _{k = 1}^\infty f_k(x)\), then \(f(x)\) is also continuous. Cauchy’s proof was somewhat vague, and significantly did not contain the hypothesis that convergence is uniform. When Cauchy read Fourier’s manuscript, he advised Fourier not to publish because the book was not rigorous and contained false results. An academic discussion then began in France over the correct way to describe general waves.

But as sometimes happens in mathematics, the discussion was cut short by a spectacular theorem of Dirichlet, published in 1829. Dirichlet proved that the Fourier series of a piecewise differentiable function always converges pointwise to the function. So Fourier was correct and the theorem in Cauchy’s book was wrong. This led to the discovery of uniform convergence of series and far greater rigor in the subject. Over a century later, the University of Chicago published a “great books” series, with books by Plato, Aristotle, Darwin, Freud, and many others. Fourier’s book was in that series.

So far we have put no restrictions on our functions. Arbitrary functions can be ugly: nowhere continuous, nowhere differentiable, not integrable, etc. So we restrict to a class of functions that contains all the waves discussed so far, and yet allows us to do standard calculations rigorously.

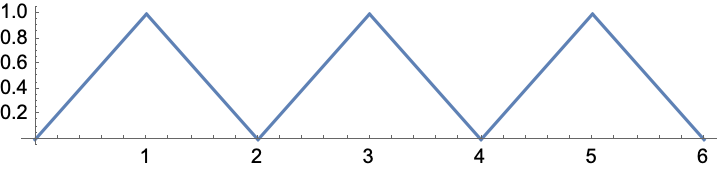

A function \(f(x)\) defined on the entire real line is said to be piecewise differentiable if for any finite interval \([a, b] \subset R\), the interval can be broken into a union of finitely many subintervals \([a, x_1], [x_1, x_2], \ldots , [x_n, b]\) such that \(f\) restricted to each subinterval \([x_i, x_{i + 1}]\) is differentiable. If \(f\) is periodic, it suffices to break the interval \([0, 2 \pi ]\) into subintervals in this way.

The above picture shows the idea; this graph has three differentiable pieces. However, more needs to be said about the behavior at the endpoints of the subintervals.

We say that \(f(x)\) is differentiable on the interval \([x_k, x_{k + 1}]\) if there is a differentiable function \(g(x)\) on a larger open interval which agrees with \(f\) on \((x_k, x_{k + 1})\) and thus on the interval except perhaps at the endpoints. Thus in the definition of piecewise differentiability, functions are allowed to have discontinuities at the division points. The values they take at these points can be entirely arbitrary. But there is a natural “left value” by taking the limit of \(f(x)\) as \(x\) approaches the division point from the left, and a natural “right value” by taking the limit of \(f(x)\) as \(x\) approaches the division point from the right, and indeed one-sided derivatives exist at these division points by using these values.

Nothing in the following sections depends on the value of \(f\) at points of discontinuity. We will assume in this article that \(f\) is assigned the average of its value from the left and its value from the right at these points.

In the previous section we used \(\sin (kx)\) but never mentioned \(\cos (kx)\). The reason is that \(\sin \) and \(\cos \) vary only by a phase: \[\sin (kx + {\pi / 2} ) = \sin (kx) \cos (\pi / 2) + \cos (kx) \sin (\pi / 2) = \cos (kx)\]

We can use this result to eliminate phases entirely at the cost of adding cosine terms. We get slightly nicer formulas by writing our original assertion using cosines rather than sines. So \[f(x) = \sum _{k = 0}^\infty A_k \cos (kx - \delta _k)\] Now notice that \[A_k \cos (kx - \delta _k) = A_k \cos (kx) \cos (\delta _k) + A_k \sin (kx) \sin (\delta _k) = a_k \cos (kx) + b_k \sin (kx)\] Here \[a_k = A_k \cos \delta _k\] \[b_k = A_k \sin \delta _k\] These are exactly the formulas which translate polar coordinates to rectangular coordinates in the plane. So if you know \(a_k\) and \(b_k\), plot the point \((a_k, b_k)\). Then \(A_k\) is the distance from this point to the origin and \(\delta _k\) is its angle. Going the other direction is just as easy.
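The conversion between the amplitude and phase form and the cosine and sine form can be sketched in a few lines of Python. This is only an illustration: the function names `to_rectangular` and `to_polar` and the sample values are assumptions made here, not part of the original text.

```python
import math

def to_rectangular(A, delta):
    """Amplitude and phase (A, delta) -> (a, b) with a = A cos(delta), b = A sin(delta)."""
    return A * math.cos(delta), A * math.sin(delta)

def to_polar(a, b):
    """Coefficients (a, b) -> amplitude A (distance to origin) and phase delta (angle)."""
    return math.hypot(a, b), math.atan2(b, a)

# Hypothetical sample harmonic: A cos(kx - delta) should equal a cos(kx) + b sin(kx).
A, delta, k, x = 2.0, 0.7, 3, 1.234
a, b = to_rectangular(A, delta)
lhs = A * math.cos(k * x - delta)
rhs = a * math.cos(k * x) + b * math.sin(k * x)
print(abs(lhs - rhs))  # difference should be at rounding level
```

Note that `math.atan2` returns an angle in \((-\pi , \pi ]\), so a phase outside that range comes back reduced modulo \(2 \pi \).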

Therefore instead of writing a Fourier series as we previously did, we can write \[f(x) = {{a_0} \over 2} + \sum _{k = 1}^\infty \Big ( a_k \cos (kx) + b_k \sin (kx) \Big )\]

Mathematicians always use this variation. Physicists prefer the first one because the amplitude and phase have physical meaning. It is trivial to go back and forth between the two variants.

In the special case \(k = 0\), the term \(\cos (kx)\) is \(1\) and the term \(\sin (kx)\) is zero and thus the term \(a_k \cos (kx) + b_k \sin (kx)\) is just some constant. We call this constant \({a_0} \over 2\) rather than \(a_0\) for a reason that will soon be apparent.

We are about to explain Fourier’s amazing formula giving the \(a_k\) and \(b_k\) directly in terms of \(f(x)\). This formula involves just a little trigonometry. But we are lazy. Trigonometry becomes a lot easier if we use complex numbers, so we’ll do that.

Definition 1 If \(x\) is a real number, then \[e^{ix} = \cos x + i \sin x\]

Remark: This result is a theorem in most complex variable courses. But for these lectures we can take it as the definition of \(e\) to a purely imaginary power. The sole reason for introducing this definition is that \[e^{i(x + y)} = e^{ix} e^{iy}\] Moreover, this formula is just the addition formulas from trigonometry, as the short proof below shows. But the addition formulas are complicated and messy, while this exponential formula is easy.

Proof: \begin {align*} e^{i(x + y)} &= \cos (x + y) + i \sin (x + y) \\ &= \left ( \cos x \cos y - \sin x \sin y \right ) + i \left ( \sin x \cos y + \cos x \sin y \right ) \\ &= \left ( \cos x + i \sin x \right ) \ \left ( \cos y + i \sin y \right )\\ &= e^{ix} \ e^{iy} \end {align*}
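As a quick sanity check, the exponential law can be tested numerically using nothing but Definition 1. The helper name `euler` and the sample angles are assumptions chosen for illustration.

```python
import math

def euler(x):
    """e^{ix} taken as cos x + i sin x, following Definition 1."""
    return complex(math.cos(x), math.sin(x))

# Hypothetical sample angles; any real values should work.
x, y = 0.83, -2.1
gap = abs(euler(x + y) - euler(x) * euler(y))
print(gap)  # should be at rounding level
```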

In complex notation, the formula for a Fourier series becomes \[f(x) = \sum _{k = - \infty }^\infty c_k e^{ikx}\] where \(x\) is still real, but \(f(x)\) is allowed to take complex values and the \(c_k\) are complex numbers.

We are about to state Fourier’s formula computing the \(a_k\) and \(b_k\) once \(f(x)\) is known. We’ll work first over the complex numbers where the algebra is much easier, and then we’ll give the easy translation to the real case.

Theorem 1 If \(k\) and \(l\) are integers,

\[\left . {1 \over {2 \pi }} \int _{- \pi }^\pi e^{ikx} \overline {e^{ilx}}\ dx = \right \{ \begin {array}{ll} 0 & \mbox {if $k \ne l$} \\ \\ 1 & \mbox {if $k = l$} \end {array}\]

Proof: Suppose first that \(k \ne l\). The integrand is \(e^{ikx}e^{-ilx} = e^{i(k -l)x}\) and so the integral is \(\left .{1 \over {2 \pi }} {{e^{i(k -l)x}} \over {i(k -l)}} \right |_{-\pi }^\pi \). This expression is zero because \(e^{i(k -l)x}\) takes the same value at \(-\pi \) and \(\pi \). Indeed \(\cos (k - l) x\) and \(\sin (k - l)x\) are periodic of period \(2 \pi \).

This argument fails if \(k = l\) because we cannot divide by zero. But in that case \(e^{ikx} \overline {e^{ikx}} = e^{ikx} e^{-ikx} = e^0 = 1\) and the integral is \({1 \over {2 \pi }} \int _{- \pi }^\pi 1 \ dx = 1.\)

Remark: By periodicity, we could just as well integrate from \(0\) to \(2 \pi \). It is traditional to integrate from \(-\pi \) to \(\pi \).
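The orthogonality relations can also be checked numerically. The sketch below approximates the integral with a midpoint rule; the function name `inner_exp` and the number of sample points are assumptions, and the check is only an illustration of Theorem 1, not a proof.

```python
import cmath
import math

def inner_exp(k, l, n=2000):
    """Midpoint-rule value of (1/2pi) * integral over [-pi, pi] of e^{ikx} * conj(e^{ilx})."""
    h = 2 * math.pi / n
    total = 0j
    for j in range(n):
        x = -math.pi + (j + 0.5) * h
        # conj(e^{ilx}) = e^{-ilx}
        total += cmath.exp(1j * k * x) * cmath.exp(-1j * l * x)
    return total * h / (2 * math.pi)

print(abs(inner_exp(3, 3)))  # close to 1
print(abs(inner_exp(3, 5)))  # close to 0
```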

Theorem 2 (Fourier) The \(c_k\) are given by \[c_k = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) e^{- i k x} \ dx\]

Proof: Suppose \(f(x) = \sum _{m = - \infty }^\infty c_m e^{imx}\). Multiply both sides by \(\overline {e^{i k x}}\) to obtain \[f(x) \overline {e^{ikx}} = \sum _{m = - \infty }^\infty c_m e^{imx} \overline {e^{ikx}}\] Integrate both sides from \(- \pi \) to \(\pi \), divide by \(2 \pi \), and assume it is legal to integrate term by term, to obtain \[{1 \over {2 \pi }} \int _{- \pi }^\pi f(x) \overline {e^{ikx}} \ dx = \sum _{m = - \infty }^\infty c_m {1 \over {2 \pi }} \int _{- \pi }^\pi e^{i m x} \overline {e^{ikx}}\ dx\] By the orthogonality relations, the integrals on the right are zero when \(m \ne k\) and one when \(m = k\). So the above formula collapses to give \[{1 \over {2 \pi }} \int _{- \pi }^\pi f(x) \overline {e^{ikx}} \ dx = c_k\] QED.

Remark: Our worry about the rigor of integrating this series term by term will eventually be unimportant. We will simply define the \(c_k\) by this formula and then prove directly that the resulting series converges to \(f\).

Theorem 3 Suppose \(f(x)\) takes real values. Then the \(c_k\) computed using Fourier’s formula satisfy \[c_{-k} = \overline {c_k}\]

Proof: Conjugate the formula \(c_k = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) e^{- i k x} \ dx\). Since \(f\) is real valued, it does not change, and so the only change on the right side is that \(e^{-i k x}\) becomes \(\overline {e^{- i k x}} = e^{i k x}\) and thus \(k\) changes sign. QED.

Theorem 4 (Fourier) If \(f(x)\) takes real values and we write \[f(x) = {{a_0} \over 2} + \sum _{k = 1}^\infty \left ( a_k \cos (kx) + b_k \sin (kx) \right )\] then \[a_k = {1 \over \pi } \int _{- \pi }^\pi f(x) \cos (kx) \ dx \hspace {.5in} b_k = {1 \over \pi } \int _{- \pi }^\pi f(x) \sin (kx) \ dx\] and \[c_k = {1 \over 2} \left ( a_k - i b_k \right )\]

Proof: We work in reverse. We have \[c_k = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) e^{- ikx} \ dx = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) \left ( \cos (kx) - i \sin (kx) \right ) \ dx\] \[ = {1 \over 2} \left ( a_k - i b_k \right )\] Then \begin {align*} f(x) &= \sum _{- \infty }^\infty c_k e^{ikx} \\ &= \sum _{- \infty }^\infty {1 \over 2} \left ( a_k - i b_k \right ) \left ( \cos (kx) + i \sin (kx) \right ) \\ &= {{a_0} \over 2} + {1 \over 2} \sum _{k = -1}^{-\infty } \left ( a_k - i b_k \right ) \left ( \cos (kx) + i \sin (kx) \right ) + {1 \over 2} \sum _{k = 1}^\infty \left ( a_k - i b_k \right ) \left ( \cos (kx) + i \sin (kx) \right ) \\ &= {{a_0} \over 2} + {1 \over 2} \sum _{k = 1}^{\infty } \overline {\left ( a_k - i b_k \right )} \overline { \left ( \cos (kx) + i \sin (kx) \right ) }+ {1 \over 2} \sum _{k = 1}^\infty \left ( a_k - i b_k \right ) \left ( \cos (kx) + i \sin (kx) \right )\\ &= {{a_0} \over 2} + \Re \Big [ \sum _{k = 1}^\infty \left ( a_k - i b_k \right ) \left ( \cos (kx) + i \sin (kx) \right ) \Big ] \\ &= {{a_0} \over 2} + \sum _{k = 1}^\infty \left ( a_k \cos (kx) + b_k \sin (kx) \right ) \end {align*}
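The relations \(c_k = {1 \over 2}(a_k - i b_k)\) and \(c_{-k} = \overline {c_k}\) can be checked on a concrete real-valued function. The sketch below uses a midpoint rule; the function name `fourier_coefficients`, the test function \(x^2 + x\) on \((-\pi , \pi )\), and the sample count are all assumptions made for illustration.

```python
import cmath
import math

def fourier_coefficients(f, k, n=4000):
    """Midpoint-rule approximations of a_k, b_k and c_k for f on [-pi, pi]."""
    h = 2 * math.pi / n
    xs = [-math.pi + (j + 0.5) * h for j in range(n)]
    a = sum(f(x) * math.cos(k * x) for x in xs) * h / math.pi
    b = sum(f(x) * math.sin(k * x) for x in xs) * h / math.pi
    c = sum(f(x) * cmath.exp(-1j * k * x) for x in xs) * h / (2 * math.pi)
    return a, b, c

def f(x):
    # Hypothetical real-valued test function on (-pi, pi).
    return x * x + x

a2, b2, c2 = fourier_coefficients(f, 2)
c_minus2 = fourier_coefficients(f, -2)[2]
print(abs(c2 - 0.5 * (a2 - 1j * b2)))    # Theorem 4: should be at rounding level
print(abs(c_minus2 - c2.conjugate()))    # Theorem 3: should be at rounding level
```

Because all three coefficients are computed with the same quadrature rule, the two identities hold up to rounding error even though each individual coefficient is only approximate.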

Remark: In treatments which avoid complex numbers, the orthogonality relations are proved directly for products of two sines, for products of a sine and a cosine, and for products of two cosines, and then Fourier’s argument involving integrating term by term is applied twice, once multiplying both sides by a sine, and once multiplying by a cosine.

Apply these formulas to the square wave defined by \(f(x) = -1\) for \(- \pi < x < 0\) and \(f(x) = 1\) for \(0 < x < \pi \).

Then each \(a_k = 0\) because \(f(x)\) is odd and \(\cos kx\) is even and the left and right integrals cancel. The \(b_k\) can be computed by integrating from \(0\) to \(\pi \) and multiplying by \(2\) since \(f\) and \(\sin kx\) are odd and so their product is even. We obtain \[b_k = {2 \over \pi } \int _0^\pi \sin kx \ dx = \left . {2 \over {k \pi }} \left (- \cos kx\right ) \right |_0^\pi = {2 \over {k \pi }} \left ( (-1)^{k + 1} + 1 \right )\]

So all terms are zero except \(b_k = {4 \over {k \pi }}\) for \(k\) odd. We conclude that \[f(x) = {4 \over \pi } \left (\sin x + {1 \over 3} \sin 3x + {1 \over 5} \sin 5x + \ldots \right )\] Substitute \(x = {\pi \over 2}\) in this formula to obtain \(1 = {4 \over \pi } \left (1 - {1 \over 3} + {1 \over 5} - \ldots \right )\) and thus Leibniz’ famous result \[{\pi \over 4} = 1 - {1 \over 3} + {1 \over 5} - \ldots \]
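The square wave calculation is easy to try out numerically. The following sketch evaluates the closed form for \(b_k\) and a partial sum of the series; the function names and the number of terms are assumptions chosen for illustration.

```python
import math

def b_coefficient(k):
    """Closed form from the text: b_k = (2 / (k pi)) * ((-1)^(k+1) + 1)."""
    return (2 / (k * math.pi)) * ((-1) ** (k + 1) + 1)

def square_wave_partial_sum(x, terms):
    """(4/pi) * sum of sin((2j+1)x) / (2j+1) over the first `terms` odd harmonics."""
    return (4 / math.pi) * sum(
        math.sin((2 * j + 1) * x) / (2 * j + 1) for j in range(terms)
    )

# At x = pi/2 the partial sums are (4/pi) times the partial sums of Leibniz' series,
# so they should approach 1, slowly.
value_at_half_pi = square_wave_partial_sum(math.pi / 2, 2000)
print(value_at_half_pi)
```

The convergence at \(x = {\pi \over 2}\) is slow, with an error of roughly the size of the first omitted term, which is typical for the series of a function with jumps.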

Below are the interactive experiments from the original Fourier project.


Sage is a fantastic project from the University of Washington to provide an open source program able to do mathematical calculations and display the result. A server exists to perform Sage calculations over the internet. Thus none of the examples below require a local copy of Sage. These examples were copied from Sage documentation; see https://wiki.sagemath.org/

and also https://www.sagemath.org

Here is a lecture by John Maynard, who recently won a Fields Medal.

When \(a \ne 0\), there are two solutions to \(ax^2 + bx + c = 0\) and they are $$x = {-b \pm \sqrt{b^2-4ac} \over 2a}.$$

Also $$\int_0^\infty e^{- x^2} \ dx = {{\sqrt{\pi}} \over 2}$$ And \(\alpha, \beta, \Gamma^{i_1}_{j_1 j_2}\).

Here is an entirely irrelevant link to the slides of a talk I once gave at Cal Poly Humboldt in Arcata, California: https://pages.uoregon.edu/koch/HumboldtTalk.pdf

PreTeXt is an entirely separate project by Robert Beezer at the University of Puget Sound. I like to mention this project because it is very well designed. In PreTeXt, documents are written in xml with mathematical formulas written in LaTeX. The software then converts this source to pdf, or html, or other formats.

Beezer has gathered together several authors, who converted their textbooks from LaTeX to PreTeXt, adding interactive elements. When these authors desired other interactions not in PreTeXt, Beezer added them to PreTeXt. Thus the project is driven by real-world requirements.

The only reason PreTeXt is in this document is that I’d like to demonstrate that the other method of getting interaction by writing separate web pages still works.

This is the Minimal example from the PreTeXt distribution. Interactive/Minimal/minimal.html

Dirichlet is one of my heroes. His wife was a sister of Mendelssohn, so there was a lot of music in his home. After Gauss convinced Riemann to drop his plans to get a theology degree and study mathematics, Gauss sent him to Berlin for two years to work with Dirichlet. Dirichlet had an enormous influence on all of Riemann’s work.

In number theory, Dirichlet proved that infinitely many primes end in each of 1, 3, 7, 9 when written in base 10, and proved the corresponding result for all other bases. This result had been used by Legendre in an attempted proof of the law of quadratic reciprocity, but Gauss complained that the result was considerably deeper than the theorem Legendre proved with it. But Dirichlet proved that considerably deeper result. In his paper, Dirichlet wrote “a proof of this result is desirable, particularly since Legendre has already used it.”

Dirichlet’s work on harmonic differential equations and what we now call the “Dirichlet principle” was profound. And finally, in Fourier theory he proved the following crucial result:

Theorem 5 (Dirichlet) Suppose \(f(x)\) is a piecewise differentiable periodic function with period \(2 \pi \). Compute the \(a_k\) and \(b_k\) using Fourier’s formula; this is possible because \(f\) is certainly Riemann integrable. Then the Fourier series of \(f\) converges pointwise to \(f\). In particular, at discontinuities of \(f\) it converges to the average of the left and right limits of \(f\) at the singularity.

We want to prove that the partial sums \[\sum _{-N}^N c_k e^{ikx}\] converge pointwise to \(f(x)\).

The first step is to find a more useful formula for this partial sum. This is just algebra, and it is very straightforward. Inserting the definition of \(c_k\), the partial sum equals \[\sum _{-N}^N \left ({1 \over {2 \pi }} \int _{- \pi }^\pi f(t) e^{-ikt} \ dt\right ) e^{ikx} = {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) \sum _{-N}^N e^{i k (x - t)} \ dt\]

Definition 2 The Dirichlet kernel function is defined by \[D_N(u) = \sum _{k = -N}^N e^{iku}\]

Remark: It follows that \[\sum _{-N}^N c_k e^{ikx} = {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) D_N(x - t) \ dt\] and we want to prove that as \(N \rightarrow \infty \), this expression has limit \(f(x)\).

The second step is also algebra but the algebra is a little harder. Working with complex exponents makes the calculation easier.

Theorem 6 \[D_N(u) = {{\sin \left (N + {1 \over 2} \right )u } \over {\sin {u \over 2}}}\]

Proof: Multiply \(D_N(u)\) by \(e^{i {u \over 2}}\) and \(e^{-i {u \over 2}}\) to obtain the following expressions:

\[D_N(u) e^{i {u \over 2}} = \sum _{-N}^N e^{i \left (k + {1 \over 2}\right )u}\] \[D_N(u) e^{- i {u \over 2}} = \sum _{-N}^N e^{i \left (k - {1 \over 2}\right )u}\] Then subtract to get \[D_N(u) \left (e^{i {u \over 2}} - e^{- i {u \over 2}} \right ) = \sum _{-N}^N\left ( e^{i \left (k + {1 \over 2}\right )u} - e^{i \left (k - {1 \over 2}\right )u} \right ) \] But in the sum on the right, most of the terms cancel. Indeed, \(e^{i \left (k + {1 \over 2}\right )u} - e^{i \left ( (k + 1) - {1 \over 2} \right )u} = 0\), so the \(k\)th term with positive sign cancels the \((k + 1)\)th term with negative sign. The only terms which do not cancel are the \(N\)th term with positive sign and the \((-N)\)th term with negative sign. We obtain \[D_N(u) \left (e^{i {u \over 2}} - e^{- i {u \over 2}} \right ) = e^{i \left ( N + {1 \over 2} \right )u} - e^{- i \left ( N + {1 \over 2} \right ) u}\] However, for any \(x\) we have \(e^{ix} - e^{-ix} = \left ( \cos x + i \sin x \right ) - \left ( \cos x - i \sin x \right ) = 2 i \ \sin x.\) So the previous formula can be rewritten \[D_N(u) \ 2i \ \sin {u \over 2} = 2 i \ \sin \left (N + {1 \over 2} \right ) u\] and the theorem immediately follows.
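The closed form for the Dirichlet kernel can be compared against the defining sum at a few sample points. The function names and sample values below are assumptions made for illustration, and the closed form is only evaluated where \(\sin {u \over 2} \ne 0\).

```python
import cmath
import math

def dirichlet_sum(N, u):
    """Definition 2: the sum of e^{iku} for k = -N..N (the imaginary parts cancel)."""
    return sum(cmath.exp(1j * k * u) for k in range(-N, N + 1)).real

def dirichlet_closed(N, u):
    """Theorem 6: sin((N + 1/2) u) / sin(u / 2), valid when sin(u / 2) != 0."""
    return math.sin((N + 0.5) * u) / math.sin(u / 2)

# Hypothetical sample points away from u = 0.
gaps = [abs(dirichlet_sum(10, u) - dirichlet_closed(10, u)) for u in (0.3, 1.0, -2.5)]
print(max(gaps))  # should be at rounding level
```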

Our conclusion can be written in a form which doesn’t mention complex exponents. From section six we learn that \[{{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right ) = \sum _{k = -N}^N c_k e^{ikx}\] and from sections 7 and 8 we learn that \[\sum _{k = -N}^N c_k e^{ikx} = {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) D_N(x - t) \ dt = {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt \] This proves

Theorem 7 \[{{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right ) = {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt \]

The picture below shows the Dirichlet kernel when \(N = 50\).

Notice the very large peak at the origin. This is caused because both the numerator and the denominator of our formula for the Dirichlet kernel vanish at the origin. But for small \(x\), \(\sin x \sim x\). So \[{{\sin \left ( N + {1 \over 2} \right ) (x - t)} \over {\sin {{x - t} \over 2}}} \sim {{\left ( N + {1 \over 2} \right ) (x - t)} \over { {{x - t} \over 2}}}= 2N + 1\]

Everywhere else the kernel is small and oscillating. Indeed, if we stay away from the origin, then the denominator \(\sin {u \over 2}\) stays away from zero, and so the kernel is a bounded multiple of \(\sin \left ( N + {1 \over 2}\right )u\), which oscillates rapidly.

When we compute the expression \[ {1 \over {2 \pi }} \int _{- \pi }^\pi f(t) {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt \] we must separate the integral into two pieces. The first piece will integrate over \(t\) very close to \(x\), where we have a peak. As \(N \rightarrow \infty \), the peak becomes narrower and narrower, sending this integral to zero, but simultaneously the peak gets higher and higher, sending the integral to infinity. We will show that a sort of compromise occurs and the actual integral over this region approaches \(f(x)\).

But everywhere else, there are no peaks and we are integrating a fairly level function multiplied by a very rapidly oscillating function. When we integrate such a product, the negative regions and positive regions will tend to cancel out and the resulting integral should be very small.

Our job is now to make this intuition rigorous. We first handle integrating over \(t\) very close to \(x\), where the peak matters.

Theorem 8 \[{1 \over {2 \pi }} \int _{- \pi }^\pi D_N(t)\ dt = 1\]

Proof: \[{1 \over {2 \pi }} \int _{- \pi }^\pi D_N(t)\ dt = \sum _{-N}^N {1 \over {2 \pi }} \int _{- \pi }^\pi e^{ikt} \ dt\] By the orthogonality relations, all of the terms in the sum on the right vanish except the term when \(k = 0\), which equals one.
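Both the height of the peak and the normalization in Theorem 8 can be observed numerically. The sketch below uses the closed form of the kernel and a midpoint rule; the function names, the sample count `n`, and the choice \(N = 50\) are assumptions. An even `n` keeps the sample points away from \(u = 0\), where the closed form is \(0/0\).

```python
import math

def dirichlet_kernel(N, u):
    """Closed form sin((N + 1/2) u) / sin(u / 2), for u with sin(u / 2) != 0."""
    return math.sin((N + 0.5) * u) / math.sin(u / 2)

def kernel_mean(N, n=4000):
    """Midpoint-rule value of (1/2pi) * integral of D_N over [-pi, pi]; assumes n even, N < n."""
    h = 2 * math.pi / n
    total = sum(dirichlet_kernel(N, -math.pi + (j + 0.5) * h) for j in range(n))
    return total * h / (2 * math.pi)

mean_50 = kernel_mean(50)                   # Theorem 8 predicts 1
near_peak = dirichlet_kernel(50, 1e-6)      # close to 2N + 1 = 101
print(mean_50, near_peak)
```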

Theorem 9 If \(f(x)\) is piecewise continuous, \[f(x) = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt\]

Proof: Notice that we are integrating with respect to \(t\), so \(f(x)\) is a constant. Consequently we can pull it out of the integral and cancel it from both sides. So it suffices to prove that \[1 = {1 \over {2 \pi }} \int _{- \pi }^\pi {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt\] The integrand is just the Dirichlet kernel, so it suffices to prove that \[1 = {1 \over {2 \pi }} \int _{-\pi }^\pi D_N(x - t)\ dt\] Notice first that \[1 = {1 \over {2 \pi }} \int _{- \pi }^\pi D_N(t) \ dt = {1 \over {2 \pi }} \int _{- \pi }^\pi D_N(-t) \ dt\] because \(D_N(t) = \sum _{-N}^N e^{ikt}\) is symmetric across the \(y\)-axis. Notice next that \(D_N(x - t)\) is just a translation of \(D_N(-t)\). But \(D_N(-t)\) is periodic of period \(2 \pi \) because each \(e^{ikt}\) is periodic. If a function is periodic of period \(2 \pi \), the integral of the function from \(- \pi \) to \(\pi \) equals the integral of any translation of the function from \(-\pi \) to \(\pi \). QED.

Theorem 10 If \(f(x)\) is piecewise continuous, \[f(x) - \left ({{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right )\right ) = {1 \over {2 \pi }} \int _{- \pi }^\pi \Big ( f(x) - f(t)\Big ) {{\sin \left (N + {1 \over 2}\right )(x - t)} \over {\sin {{x - t} \over 2}}} \ dt\]

Proof: This follows immediately from theorems 7 and 9. QED.

Theorem 11 If \(f(x)\) is piecewise continuous, \[f(x) - \left ({{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right )\right ) = {1 \over {2 \pi }} \int _{- \pi }^\pi \Big ( f(x) - f(x + u)\Big ) {{\sin \left (N + {1 \over 2}\right )u} \over {\sin {{u \over 2}}}} \ du\]

Proof: Make the change of variable \(u = t - x\) in the previous formula. Notice that \(t = x + u\) and \(dt = du\). Using the identity \(\sin (-u) = - \sin u\), the previous result becomes the following: \[f(x) - \left ({{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right )\right ) = {1 \over {2 \pi }} \int _{- \pi - x}^{\pi - x} \Big ( f(x) - f(x + u)\Big ) {{\sin \left (N + {1 \over 2}\right )u} \over {\sin {{u \over 2}}}} \ du\] Every function of \(u\) in the integral on the right is periodic; in particular this holds for the Dirichlet kernel since it is a sum of periodic expressions. Consequently we can translate the limits of integration without changing the result. QED.

Remark: The final result indeed handles the peak, for the following reason. We now have an extra \(f(x)\) on the left side, so instead of proving that both expressions converge to \(f(x)\), we want to prove that both expressions converge to zero.

In the integral on the right, instead of \(f(x + u)\) we have \(f(x) - f(x + u)\), which vanishes at the peak where \(u = 0\). This has the effect of cancelling out the influence of the peak, and now we only have to show that the integral goes to zero as \(N\) increases.

We begin by reorganizing the right side of the previous formula. It equals \[ {1 \over {2 \pi }} \int _{- \pi }^\pi \left ( {{f(x) - f(x + u)} \over {u}}\right ) \left ( {{u} \over {\sin {{u} \over 2}}} \right ) \left [\cos {u \over 2} \sin Nu + \sin {u \over 2} \cos Nu \right ] \ du\] If we let \[g(u) = \left ( {{f(x) - f(x + u)} \over {u}}\right ) \left ( {{u} \over {\sin {{u} \over 2}}} \right ) \cos {u \over 2}\] and \[h(u) = \left ( {{f(x) - f(x + u)} \over {u}}\right ) \left ( {{u} \over {\sin {{u} \over 2}}} \right ) \sin {u \over 2}\] then our final formula is \[f(x) - \left ({{a_0} \over 2} + \sum _{k = 1}^N \left ( a_k \cos kx + b_k \sin kx \right )\right ) = {1 \over {2 \pi }} \int _{- \pi }^\pi g(u) \sin Nu \ du + {1 \over {2 \pi }} \int _{- \pi }^\pi h(u) \cos Nu \ du\]

Remark: We are ready to describe the key step in Dirichlet’s proof. Fix a point \(x\) where \(f\) is differentiable. Since our function is piecewise differentiable, \(f\) will be differentiable at almost every point, so the argument we now give will usually work. We want to prove that the above formula converges to zero as \(N \rightarrow \infty \).

But notice that the functions \(g(u)\) and \(h(u)\) are piecewise continuous. Indeed, each of these expressions is a product of three terms. The first is piecewise differentiable (and thus piecewise continuous) except possibly when \(u = 0\). However, the limit of the expression as \(u \rightarrow 0\) exists because \(f\) is differentiable at \(x\). So the first term is continuous at \(u = 0\).

The second term is well-behaved except at \(u = 0\), but a famous result from calculus says that it also has a limit as \(u\) approaches zero and thus is continuous there.

So the right side of the previous formula is just one half of the sum of the Fourier coefficients \(b_N\) for \(g\) and \(a_N\) for \(h\), where \(g\) and \(h\) are piecewise continuous.

This leads us to the final step in Dirichlet’s proof.

In this section, we will use very different ideas to prove the following result:

Theorem 12 (Riemann-Lebesgue) Suppose \(f(x)\) is a piecewise continuous function on \([- \pi , \pi ]\). Let \(a_n\) and \(b_n\) be the Fourier coefficients of \(f\). Then \(a_n \rightarrow 0\) and \(b_n \rightarrow 0\) as \(n\) approaches infinity.

Remark: From our previous work, this proves Dirichlet’s theorem at all points where \(f(x)\) is differentiable.

Remark: The rough idea of the proof is illustrated by the picture at the top of the next page. The Fourier coefficients are obtained by multiplying \(f\) by a rapidly oscillating function, and then integrating the product. But this product will contain many positive and negative spikes whose integrals will almost cancel each other.

The actual proof we give is more abstract; it feels like linear algebra rather than analysis.

Proof: We will work over the complex numbers and thus prove that \(c_n \rightarrow 0\) as \(n \rightarrow \infty \). Since \(c_n = {1 \over 2} (a_n - i b_n)\), this will prove the result.

Step 1 of proof: Let \(V\) be the set of all piecewise continuous complex-valued functions defined on the interval \([ - \pi , \pi ]\). If \(f(x) \in V\), then \(f\) is continuous except at finite jumps. At such a point of discontinuity \(x_0\), the limit from the left \(L\) of \(f(x)\) exists as \(x\) approaches \(x_0\). Similarly the limit from the right \(R\) of \(f(x)\) exists as \(x\) approaches \(x_0\). We require that \(f(x_0) = {{L + R} \over 2}\).

Clearly we can add elements of \(V\) and multiply them by complex scalars. It is easy to verify that these operations make \(V\) into a complex vector space.

Now define an inner product on this space by writing \[< f, g > = {1 \over {2 \pi }} \int _{- \pi }^\pi f(x) \overline {g(x)} \ dx\] It is easy to prove that this satisfies all the requirements of a Hermitian inner product on \(V\):

\(<f, g>\) is linear in \(f\) and antilinear in \(g\)

\(<f, g> = \overline {<g, f>}\)

\(<f, f>\) is real and greater than or equal to zero

\(<f, f> = 0\) if and only if \(f\) is identically zero.

The hardest of these results is the final one. Since \(f\) is piecewise continuous, it is easy to see that if \(<f, f> = 0\), then \(f\) is identically zero on each subinterval of continuity, so we only have to worry about the points where \(f\) is not continuous. But our condition on the value of \(f\) at jump points then guarantees that \(f\) is also zero at these points.

Step 2 of proof: If \(f \in V\), notice that its Fourier coefficient \(c_k\) is given by \(c_k = <f, e^{ikx}>\). Notice also that the \(e^{ikx}\) are orthonormal in \(V\). By the next to last property of our inner product, the following expression is real and greater than or equal to zero: \[ <f - \sum _{-N}^N c_k e^{ikx}, f - \sum _{-N}^N c_k e^{ikx}>\] Expanding out, this expression equals \[<f, f> - \sum c_k<e^{ikx}, f> - \sum \overline {c_k} <f, e^{ikx}> + \sum c_k \overline {c_l} <e^{ikx}, e^{ilx}> = \] \[<f, f> - \sum c_k \overline {<f, e^{ikx}>} - \sum \overline {c_k} <f, e^{ikx}> + \sum c_k \overline {c_l} <e^{ikx}, e^{ilx}> = \] \[<f, f> - \sum c_k \overline {c_k} - \sum \overline {c_k} c_k + \sum c_k \overline {c_k}\] In this last step, we applied the orthogonality relations.

The three terms at the end are the same, and \(c_k \overline {c_k} = \left | c_k \right |^2\), so the expression \(<f, f> - \sum _{-N}^N \left | c_k \right |^2\) is greater than or equal to zero. This is a famous result due to Bessel:

Theorem 13 (Bessel’s Inequality) Suppose \(f(x)\) is piecewise continuous on \([- \pi , \pi ]\) and has complex Fourier coefficients \(c_k\). Then for any finite \(N\) we have \[\sum _{k = - N}^N |c_k|^2 \le {1 \over {2 \pi }} \int _{- \pi }^\pi |f(x)|^2\ dx\]

Corollary 14 If \(f(x)\) is as above with Fourier coefficients \(c_k\), then \(c_k \rightarrow 0\) as \(|k| \rightarrow \infty \).
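Bessel's inequality can be watched in action on the square wave from earlier. Combining \(a_k = 0\), \(b_k = {4 \over {k \pi }}\) for odd \(k\), and \(c_k = {1 \over 2}(a_k - i b_k)\) gives \(c_k = {{-2i} \over {k \pi }}\) for odd \(k\) and \(c_k = 0\) for even \(k\), while \({1 \over {2 \pi }} \int _{- \pi }^\pi |f(x)|^2 \ dx = 1\). The sketch below sums \(|c_k|^2\); the function name and the cutoffs are assumptions made for illustration.

```python
import math

def bessel_partial_sum(N):
    """Sum of |c_k|^2 over |k| <= N for the square wave.

    Only odd k contribute, with |c_k|^2 = (2 / (k pi))^2, and the terms
    for k and -k are equal, hence the factor 2.
    """
    return sum(2 * (2 / (k * math.pi)) ** 2 for k in range(1, N + 1, 2))

for N in (1, 10, 100, 1000):
    # Each value should stay below 1 and creep toward it as N grows.
    print(N, bessel_partial_sum(N))
```

Since the partial sums are bounded and their terms are nonnegative, the individual terms \(|c_k|^2\) must tend to zero, which is the content of Corollary 14.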

Remark: This proves the Riemann-Lebesgue lemma, and therefore it proves Dirichlet’s theorem at all points \(x\) where \(f\) is differentiable.

Suppose next that \(x\) is a point where \(f\) is continuous, but not differentiable. The following periodic function has such points:

The previous argument still works with just a minor patch. The problem is the term \[\left ( {{f(x) - f(x + u)} \over {u}}\right )\] in both \(g\) and \(h\) in our previous argument. The limit of this expression as \(u \rightarrow 0\) exists from the left and from the right, but the two limits are not equal. It follows that \(g\) and \(h\) have finite jumps at the origin. But both are still piecewise continuous, and the argument proceeds as before. QED.

To complete the proof of Dirichlet’s theorem, it suffices to handle the case of a finite jump. In this case we want to prove that the Fourier series converges to the average of the left and right values at the jump. The proof involves an easy trick. We earlier calculated the Fourier series of a square wave, and can directly verify the result in this case. If we have a function \(f\) with a finite jump, we can add an appropriate multiple of the square wave to make the sum continuous at \(x\). Dirichlet’s theorem has already been proved for this sum. The required result immediately follows for \(f\).

Here are the details.

The square wave \(S(x)\) has a jump at the origin. Its value from the left is \(-1\) and its value from the right is \(1\) and their average is zero. The Fourier series of this wave is \[{4 \over \pi } \left ( \sin x + {1 \over 3} \sin 3x + {1 \over 5} \sin 5x + \ldots \right )\] and the value of this series at the origin is indeed zero. So Dirichlet’s theorem is true in this special case.

We can translate the square wave so the jump is at \(x_0\) by writing \(S(x - x_0)\). The corresponding Fourier series can be obtained by translating each \(\sin kx\) by the same amount: \[\sin k(x - x_0) = \sin (kx - k x_0) = \sin (kx) \cos (k x_0) - \cos (kx) \sin (k x_0)\] So Dirichlet’s theorem is still true for the translated function.

Suppose a different function \(f(x)\) has a jump at \(x_0\) with jump amount \(J\). Here \(f(x_0^-) = L\) and \(f(x_0^+) = R\) and \(J = R - L\). Then \(f(x) - {J \over 2} S(x - x_0)\) has no jump at \(x_0\). Its value at \(x_0\) can be computed from either the left or the right, and from the right we get \[f(x_0^+) - {J \over 2} = R - {{R - L} \over 2} = {{R + L} \over 2}\] By the theorem already proved, the Fourier series of \(f(x) - {J \over 2} S(x - x_0)\) converges at \(x_0\) to the value of the function there and thus to \({R + L} \over 2\). But this Fourier series is just the Fourier series of \(f\) minus \(J \over 2\) times the Fourier series of \(S(x - x_0)\). We already know that this second series converges to zero at \(x_0\), so the series of \(f(x)\) must converge to \({R + L} \over 2\). QED.
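Convergence to the average at a jump can be observed on an example that is not in the text: take \(f(x) = e^x\) on \((-\pi , \pi )\), extended periodically. At \(x_0 = \pi \) the left value is \(e^{\pi }\), the right value is \(e^{-\pi }\), and their average is \(\cosh \pi \). A direct calculation with Fourier's formula (an assumption of this sketch, worked by hand) gives \(c_k = {{(-1)^k \sinh \pi } \over {\pi (1 - ik)}}\). The function name and the cutoff below are likewise assumptions.

```python
import math

def partial_sum_at_jump(N):
    """Symmetric partial sum of c_k e^{ik pi} for k = -N..N, for f(x) = e^x on (-pi, pi).

    With c_k = (-1)^k sinh(pi) / (pi (1 - ik)) and e^{ik pi} = (-1)^k,
    each term reduces to sinh(pi) / (pi (1 - ik)).
    """
    scale = math.sinh(math.pi) / math.pi
    total = 0j
    for k in range(-N, N + 1):
        total += scale / (1 - 1j * k)
    return total.real  # imaginary parts of the k and -k terms cancel

average = math.cosh(math.pi)
approx = partial_sum_at_jump(10000)
print(approx, average)  # the two values should be close
```

The convergence is slow, with an error on the order of \(1/N\), but the partial sums approach the average of the one-sided limits rather than either limit itself.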